Weeks after building a RAG pipeline and playing around with different agents on DigitalOcean’s Gradient Platform, I’ve been fascinated by AI agents. While going through my bookmarks, I came across a resource that breaks down How to Build an Agent. It was such a nice read, and since I’ve been experimenting with a lot of local models, especially Gemma 3 270M, it was a no-brainer to test Gemma’s tool-calling capabilities. I’ll try to break everything down from first principles so you can easily replicate this.

Understanding the Basics

What is an AI Agent?

While a Large Language Model on its own is limited to conversation, an agent can actually do things and carry out real tasks. An AI agent can:

- Understand what you want (through natural language)

- Decide what actions to take (using its training)

- Execute those actions (through tools we give it)

- Remember the conversation context (to have coherent interactions)

The secret sauce? Tools. Give an AI tools to read files, search the web, or edit documents, and suddenly you have an agent that handles tasks on its own.

How our Agent actually works

Our agent is essentially a conversation loop with superpowers. When you type “create a hello world program,” here’s what happens:

- The agent listens to your message and adds it to the conversation history

- Sends everything to the AI - your request plus a list of available tools (read_file, list_files, edit_file, create_file)

- The AI decides - “I should create a simple Python program that prints hello world”

- Requests the create_file tool with parameters: `{"path": "hello.py", "content": "print('Hello, World!')"}`

- Our agent executes it and feeds the results back: “File created at hello.py”

- The AI responds - “I’ve created a hello world program in Python for you. You can run it with python3 hello.py”
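To make that sequence concrete, here is a self-contained sketch of the same loop with a scripted stand-in for the model. `fakeModel`, `step`, and `runLoop` are hypothetical names for illustration only; in the real agent the decision comes from LM Studio and the tools touch real files.

```go
package main

import (
	"fmt"
	"strings"
)

// step is one model turn: either a tool request or a final answer.
type step struct {
	ToolName string // non-empty when the model wants a tool
	ToolArg  string // a single string argument, kept simple for the sketch
	Answer   string // final reply when no tool is requested
}

// fakeModel stands in for the LLM: it requests a tool until it has
// seen a tool result in the history, then answers.
func fakeModel(history []string) step {
	for _, h := range history {
		if strings.HasPrefix(h, "tool:") {
			return step{Answer: "I've created hello.py for you."}
		}
	}
	return step{ToolName: "create_file", ToolArg: "hello.py"}
}

// runLoop appends the user message, then alternates between asking the
// model and executing requested tools until a final answer appears or
// maxTurns is used up.
func runLoop(user string, tools map[string]func(string) string, maxTurns int) (string, []string) {
	history := []string{"user: " + user}
	for turn := 0; turn < maxTurns; turn++ {
		s := fakeModel(history)
		if s.ToolName == "" {
			history = append(history, "assistant: "+s.Answer)
			return s.Answer, history
		}
		result := "Error: unknown tool " + s.ToolName
		if tool, ok := tools[s.ToolName]; ok {
			result = tool(s.ToolArg)
		}
		// Feed the tool result back so the next turn can use it.
		history = append(history, "tool: "+result)
	}
	return "", history
}

func main() {
	tools := map[string]func(string) string{
		"create_file": func(path string) string { return "File created at " + path },
	}
	answer, history := runLoop("create a hello world program", tools, 10)
	fmt.Println(strings.Join(history, "\n"))
	fmt.Println(answer)
}
```

The loop itself never interprets the request; it only dispatches what the model asks for, and `maxTurns` bounds how long the model can keep asking.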

The best part about this approach is that the AI never actually touches the files on your device. It only decides which tools to use; your local agent code does the actual work.

By the end of this guide, you’ll have built that complete system - one that can have natural conversations about files and actually manipulate them on your machine, all running locally with no cloud dependencies.

Getting Started

I wrote this in Go, like the original resource, since I figured it would be easier to build on their code and modify it for my local use cases.

Before we dive into the actual implementation, you’ll need a few things set up.

Setting Up LM Studio

LM Studio has become my go-to for local AI work. I’m privileged to have a device that can run a diverse set of AI models up to 30B parameters quite reliably and effortlessly.

- Download LM Studio from lmstudio.ai

- Install it like any regular application

- Pick a model: I recommend starting with Gemma 3 270M (about 500MB), or Qwen 3 4B if you want better results (or any other model you’d like to use)

Once installed:

- Go to the “Discover” tab and search for “gemma-3-270m-it-mlx”. The exact repo of the Gemma model I use on my Mac is this one.

- Download it (grab a coffee or even take a break - even small models take a few minutes)

- Click “Load” to activate it

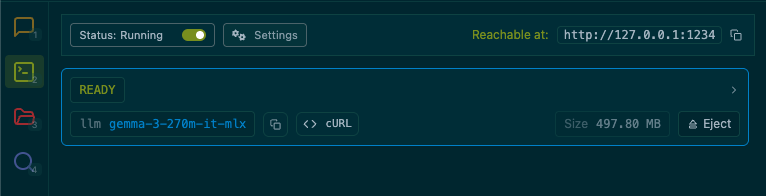

- Head to the Developer tab and toggle the server on. You’ll see “Status: Running”

You should see the server is “Reachable at: http://127.0.0.1:1234”. Leave this running as it’s your local AI API now.

Quick Verification

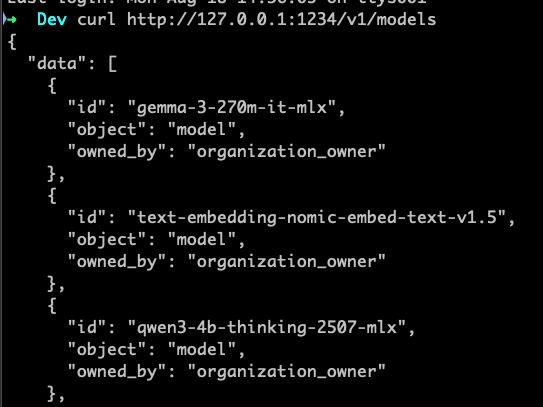

Let’s make sure everything’s working:

```shell
curl http://127.0.0.1:1234/v1/models
```

If you see your model listed, you’re ready to build!

Install Go

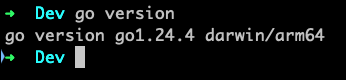

Download Go from go.dev and follow the installation instructions. Verify it’s working with `go version`. If you see a version number, you’re set.

Building Our Agent

Project Structure

I like to start simple and grow complexity as needed. Let’s create a clean structure:

```shell
mkdir local-agent
cd local-agent
go mod init github.com/yourusername/local-agent
```

This creates our project directory and a go.mod file for dependency management. Now we’ll create four files, each with a specific purpose:

| File | What It Does | Why It Matters |
|---|---|---|
| client.go | Talks to LM Studio | Your connection to the AI |
| tools.go | Defines what the agent can do | The agent’s capabilities |
| agent.go | Orchestrates everything | The brain of the operation |
| main.go | Starts the program | Your entry point |

This separation is key for me, and it has saved me countless debugging hours: when something breaks, you know exactly where to look. The original post keeps everything in a single main.go file, but splitting it up made more sense for my setup.

Building the LM Studio Client

LM Studio exposes an OpenAI-compatible API, which is familiar and easy to use. This means we can use plain HTTP without any special SDKs. When working locally and experimenting, I think direct HTTP calls teach you more about what’s happening, and when things inevitably break, you’ll know exactly where to look.

Create client.go:

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
)

// LMStudioClient handles all communication with our local AI
type LMStudioClient struct {
	baseURL string // Where LM Studio lives (http://127.0.0.1:1234)
	model   string // Which model to use
}

func NewLMStudioClient(baseURL, model string) *LMStudioClient {
	return &LMStudioClient{
		baseURL: baseURL,
		model:   model,
	}
}
```

This approach is so clutch because it needs no dependencies. No version conflicts. Just standard HTTP doing its thing.

Understanding Messages

Every conversation with an AI is just a series of messages.

```go
type Message struct {
	Role       string     `json:"role"`                   // Who's talking: "user", "assistant", or "tool"
	Content    string     `json:"content"`                // What they're saying
	ToolCalls  []ToolCall `json:"tool_calls,omitempty"`   // Tools the AI wants to use
	ToolCallID string     `json:"tool_call_id,omitempty"` // Links responses back to requests
}
```

A typical conversation might look like:

- User: “What files are in my documents folder?”

- Assistant: “Let me check…” (requests list_files tool)

- Tool: “Found: test.pdf, notes.txt” (tool result)

- Assistant: “I found 2 items in your documents folder: test.pdf and notes.txt.”
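The post doesn’t show the `ToolCall` and `ChatResponse` types that `Message` relies on. Here’s a minimal sketch of what they might look like, assuming LM Studio returns the OpenAI chat-completions shape. Only `ID`, `Function.Name`, `Function.Arguments`, and `Choices[0].Message` are used by the agent code; the other fields and the `decodeToolCalls` helper are my assumptions. Note that `arguments` arrives as a JSON-encoded string, which is why the agent can later hand it to a tool as `json.RawMessage`.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ToolCall is one tool request from the model. In the OpenAI format,
// "arguments" is a JSON-encoded string, not a nested object.
type ToolCall struct {
	ID       string `json:"id"`
	Type     string `json:"type"`
	Function struct {
		Name      string `json:"name"`
		Arguments string `json:"arguments"`
	} `json:"function"`
}

type Message struct {
	Role       string     `json:"role"`
	Content    string     `json:"content"` // a JSON null leaves this ""
	ToolCalls  []ToolCall `json:"tool_calls,omitempty"`
	ToolCallID string     `json:"tool_call_id,omitempty"`
}

// ChatResponse keeps only the fields this sketch reads.
type ChatResponse struct {
	Choices []struct {
		Message      Message `json:"message"`
		FinishReason string  `json:"finish_reason"`
	} `json:"choices"`
}

// decodeToolCalls parses a response body and returns the first choice's
// tool calls, erroring out instead of panicking on an empty reply.
func decodeToolCalls(body []byte) ([]ToolCall, error) {
	var resp ChatResponse
	if err := json.Unmarshal(body, &resp); err != nil {
		return nil, err
	}
	if len(resp.Choices) == 0 {
		return nil, fmt.Errorf("response has no choices")
	}
	return resp.Choices[0].Message.ToolCalls, nil
}

func main() {
	body := []byte(`{"choices":[{"finish_reason":"tool_calls","message":{"role":"assistant","content":"","tool_calls":[{"id":"call_1","type":"function","function":{"name":"list_files","arguments":"{\"path\":\"test_files\"}"}}]}}]}`)
	calls, err := decodeToolCalls(body)
	if err != nil {
		fmt.Println(err)
		return
	}
	for _, c := range calls {
		fmt.Printf("%s(%s) id=%s\n", c.Function.Name, c.Function.Arguments, c.ID)
	}
}
```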

Sending Requests

Here’s where the magic happens: talking to our local AI.

```go
func (c *LMStudioClient) CreateChatCompletion(messages []Message, tools []ToolSchema) (*ChatResponse, error) {
	// Package up our request
	request := ChatRequest{
		Model:       c.model,  // Which AI to use
		Messages:    messages, // The conversation so far
		Tools:       tools,    // Available tools
		MaxTokens:   1000,     // Response length limit
		Temperature: 0.7,      // Creativity level (0=boring, 1=creative)
	}

	// Tell the AI it can use tools when needed
	if len(tools) > 0 {
		request.ToolChoice = "auto"
	}

	// Convert to JSON and send
	jsonData, err := json.Marshal(request)
	if err != nil {
		return nil, fmt.Errorf("failed to create request: %w", err)
	}

	resp, err := http.Post(
		c.baseURL+"/v1/chat/completions",
		"application/json",
		bytes.NewBuffer(jsonData),
	)
	if err != nil {
		return nil, fmt.Errorf("failed to send request: %w", err)
	}
	defer resp.Body.Close()

	// Parse the response
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, fmt.Errorf("failed to read response: %w", err)
	}

	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("API error (status %d): %s", resp.StatusCode, string(body))
	}

	var chatResp ChatResponse
	if err := json.Unmarshal(body, &chatResp); err != nil {
		return nil, fmt.Errorf("failed to parse response: %w", err)
	}

	return &chatResp, nil
}
```

What I love about this approach is its transparency. Every request and response is visible. When debugging, you can see exactly what you’re sending and what comes back.
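`ChatRequest` isn’t shown in the post either. Here’s a sketch assuming the OpenAI-style field names (`max_tokens`, `tool_choice`) that LM Studio accepts. `newRequest` and `requestJSON` are hypothetical helpers: the first mirrors the `tool_choice` rule in `CreateChatCompletion`, the second returns the exact body you’d POST so you can log it. This `ToolSchema` uses a named `ToolFunction` type instead of the anonymous struct in tools.go; the JSON tags, and therefore the wire format, are the same.

```go
package main

import (
	"encoding/json"
	"fmt"
)

type Message struct {
	Role    string `json:"role"`
	Content string `json:"content"`
}

type ToolFunction struct {
	Name        string                 `json:"name"`
	Description string                 `json:"description"`
	Parameters  map[string]interface{} `json:"parameters"`
}

type ToolSchema struct {
	Type     string       `json:"type"`
	Function ToolFunction `json:"function"`
}

// ChatRequest mirrors an OpenAI-style chat-completions body.
// omitempty drops "tools" and "tool_choice" when no tools are offered.
type ChatRequest struct {
	Model       string       `json:"model"`
	Messages    []Message    `json:"messages"`
	Tools       []ToolSchema `json:"tools,omitempty"`
	ToolChoice  string       `json:"tool_choice,omitempty"`
	MaxTokens   int          `json:"max_tokens,omitempty"`
	Temperature float64      `json:"temperature"`
}

// newRequest only asks for tool_choice "auto" when tools are present.
func newRequest(model string, msgs []Message, tools []ToolSchema) ChatRequest {
	req := ChatRequest{Model: model, Messages: msgs, Tools: tools, MaxTokens: 1000, Temperature: 0.7}
	if len(tools) > 0 {
		req.ToolChoice = "auto"
	}
	return req
}

// requestJSON returns the exact bytes that would be POSTed, for logging.
func requestJSON(req ChatRequest) (string, error) {
	b, err := json.Marshal(req)
	return string(b), err
}

func main() {
	tools := []ToolSchema{{
		Type: "function",
		Function: ToolFunction{
			Name:        "list_files",
			Description: "List files in a directory",
			Parameters:  map[string]interface{}{"type": "object"},
		},
	}}
	s, err := requestJSON(newRequest("qwen3-4b-thinking-2507-mlx", []Message{{Role: "user", Content: "hi"}}, tools))
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println(s)
}
```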

Creating Tools

As I mentioned earlier, tools are what turn an AI from a chatbot into something that can actually do things. So let’s create some.

Create tools.go:

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
	"path/filepath"
	"strings"
)

// Every tool needs these four things
type Tool interface {
	Name() string                                 // What the AI calls it
	Description() string                          // When to use it
	Schema() ToolSchema                           // What parameters it needs
	Execute(args json.RawMessage) (string, error) // What it actually does
}
```

Building the File Reader

Let’s start with something useful: reading files. This tool comes in handy when you need an agent to analyze documents or configuration files.

```go
type ReadFileTool struct{}

func (t ReadFileTool) Name() string {
	return "read_file"
}

func (t ReadFileTool) Description() string {
	return "Read the contents of a file"
}

func (t ReadFileTool) Schema() ToolSchema {
	return ToolSchema{
		Type: "function",
		Function: struct {
			Name        string                 `json:"name"`
			Description string                 `json:"description"`
			Parameters  map[string]interface{} `json:"parameters"`
		}{
			Name:        t.Name(),
			Description: t.Description(),
			Parameters: map[string]interface{}{
				"type": "object",
				"properties": map[string]interface{}{
					"path": map[string]interface{}{
						"type":        "string",
						"description": "The path to the file to read",
					},
				},
				"required": []string{"path"},
			},
		},
	}
}

func (t ReadFileTool) Execute(args json.RawMessage) (string, error) {
	var params map[string]interface{}
	if err := json.Unmarshal(args, &params); err != nil {
		return "", fmt.Errorf("invalid arguments: %w", err)
	}

	// Handle different parameter names that models might use
	path := ""
	if p, ok := params["path"].(string); ok {
		path = p
	} else if p, ok := params["file"].(string); ok {
		path = p
	} else if p, ok := params["filename"].(string); ok {
		path = p
	}

	if path == "" {
		return "", fmt.Errorf("path parameter required")
	}

	content, err := os.ReadFile(path)
	if err != nil {
		return "", fmt.Errorf("failed to read file: %w", err)
	}

	return string(content), nil
}
```

Why Flexible Parameter Handling Matters

Here’s something I learned the hard way: different AI models use slightly different parameter names. One model might send `{"path": "file.txt"}` while another sends `{"file": "file.txt"}` or `{"filename": "file.txt"}`.

Early versions of my agents would break constantly because of this. Now I handle common variations upfront, and my agents work reliably across different models.
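One way to avoid copy-pasting that alias chain into every tool is a small helper. `firstString` and `pathFromArgs` are hypothetical names, not from the original code, and the key lists are whatever variations you’ve actually seen your models send. One small difference from the if/else chain: an empty value under one key falls through to the next alias instead of stopping there.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// firstString returns the first non-empty string value found under any of
// the given keys, so "path", "file", and "filename" can all be accepted.
func firstString(params map[string]interface{}, keys ...string) string {
	for _, k := range keys {
		if v, ok := params[k].(string); ok && v != "" {
			return v
		}
	}
	return ""
}

// pathFromArgs decodes raw tool arguments and resolves the path alias.
func pathFromArgs(args json.RawMessage, keys ...string) (string, error) {
	var params map[string]interface{}
	if err := json.Unmarshal(args, &params); err != nil {
		return "", fmt.Errorf("invalid arguments: %w", err)
	}
	path := firstString(params, keys...)
	if path == "" {
		return "", fmt.Errorf("one of %v is required", keys)
	}
	return path, nil
}

func main() {
	for _, raw := range []string{`{"path":"a.txt"}`, `{"file":"b.txt"}`, `{"filename":"c.txt"}`, `{"name":"d.txt"}`} {
		p, err := pathFromArgs(json.RawMessage(raw), "path", "file", "filename")
		fmt.Println(p, err)
	}
}
```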

Building More Tools

Following the same pattern, I built tools for listing directories, editing files, and creating new files. Each one follows the four-part structure: name, description, schema, and execution function.

The list files tool is particularly useful:

```go
func (t ListFilesTool) Execute(args json.RawMessage) (string, error) {
	// Decode errors are ignored on purpose: missing or malformed
	// arguments simply fall back to the current directory below.
	var params map[string]interface{}
	_ = json.Unmarshal(args, &params)

	// Handle parameter variations
	path := ""
	if p, ok := params["path"].(string); ok {
		path = p
	} else if p, ok := params["dir"].(string); ok {
		path = p
	} else if p, ok := params["directory"].(string); ok {
		path = p
	}

	// Default to current directory
	if path == "" || path == "current directory" || path == "." {
		path = "."
	}

	entries, err := os.ReadDir(path)
	if err != nil {
		return "", fmt.Errorf("failed to read directory: %w", err)
	}

	var files []string
	for _, entry := range entries {
		prefix := "📄"
		if entry.IsDir() {
			prefix = "📁"
		}
		files = append(files, fmt.Sprintf("%s %s", prefix, entry.Name()))
	}

	return strings.Join(files, "\n"), nil
}
```

I added the emoji prefixes because they make the agent’s output more chat-like, readable, and prettier. If you don’t need them, just set both prefixes to empty strings.

Now comes the interesting part: building the agent that coordinates everything. This is where I’ve spent the most time iterating and improving.

Create agent.go:

```go
package main

import (
	"bufio"
	"encoding/json"
	"fmt"
	"os"
	"strings"
)

type Agent struct {
	client   *LMStudioClient // Connection to the AI
	tools    map[string]Tool // Available tools
	messages []Message       // Conversation history
	maxTurns int             // Safety limit
}

func NewAgent(client *LMStudioClient) *Agent {
	tools := make(map[string]Tool)

	// Register all available tools
	toolList := []Tool{
		ReadFileTool{},
		ListFilesTool{},
		EditFileTool{},
		CreateFileTool{},
	}

	for _, tool := range toolList {
		tools[tool.Name()] = tool
	}

	return &Agent{
		client:   client,
		tools:    tools,
		messages: []Message{},
		maxTurns: 10, // Prevent infinite loops
	}
}
```

The Core Loop

This is where everything comes together. The agent needs to:

- Listen to the user

- Decide if tools are needed

- Execute tools if requested

- Respond naturally

```go
func (a *Agent) processWithAI() error {
	turns := 0

	for turns < a.maxTurns {
		turns++

		// Ask the AI what to do
		response, err := a.client.CreateChatCompletion(a.messages, a.getToolSchemas())
		if err != nil {
			return fmt.Errorf("failed to get AI response: %w", err)
		}
		// Guard against an empty reply instead of panicking on Choices[0]
		if len(response.Choices) == 0 {
			return fmt.Errorf("AI response contained no choices")
		}

		assistantMessage := response.Choices[0].Message

		// Did the AI want to use tools?
		if len(assistantMessage.ToolCalls) > 0 {
			fmt.Println("\n🔧 Using tools...")

			// Remember what the AI said
			a.messages = append(a.messages, assistantMessage)

			// Execute each requested tool
			for _, toolCall := range assistantMessage.ToolCalls {
				result := a.executeToolCall(toolCall)

				// Save the result for the AI to see
				a.messages = append(a.messages, Message{
					Role:       "tool",
					Content:    result,
					ToolCallID: toolCall.ID,
				})

				fmt.Printf(" ✓ %s executed\n", toolCall.Function.Name)
				if result != "" && !strings.HasPrefix(result, "Error:") {
					fmt.Printf(" Result: %s\n", result)
				}
			}

			// Loop again so the AI can use the tool results
			continue
		}

		// AI gave a final answer
		if assistantMessage.Content != "" {
			fmt.Printf("\n🤖 Agent: %s\n", assistantMessage.Content)
			a.messages = append(a.messages, assistantMessage)
		}

		break
	}

	return nil
}
```

What I find elegant about this design is its simplicity. The agent doesn’t need to understand what each tool does; it simply trusts the AI to make good decisions and executes whatever is requested.
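`processWithAI` calls `a.getToolSchemas()`, which isn’t shown. Here’s one minimal way to write it. Go randomizes map iteration order, so this sketch sorts by tool name to keep the `tools` list identical from request to request, which makes logged requests easier to compare. The types are trimmed down, and `namedTool` is a hypothetical stand-in for the real tools.

```go
package main

import (
	"fmt"
	"sort"
)

// ToolSchema is trimmed to the fields this sketch needs.
type ToolSchema struct {
	Type string
	Name string
}

type Tool interface {
	Name() string
	Schema() ToolSchema
}

// namedTool is a stand-in for ReadFileTool, ListFilesTool, and friends.
type namedTool string

func (t namedTool) Name() string { return string(t) }
func (t namedTool) Schema() ToolSchema {
	return ToolSchema{Type: "function", Name: string(t)}
}

// getToolSchemas collects schemas in sorted name order, because ranging
// over a Go map yields keys in an unspecified, varying order.
func getToolSchemas(tools map[string]Tool) []ToolSchema {
	names := make([]string, 0, len(tools))
	for name := range tools {
		names = append(names, name)
	}
	sort.Strings(names)
	schemas := make([]ToolSchema, 0, len(names))
	for _, name := range names {
		schemas = append(schemas, tools[name].Schema())
	}
	return schemas
}

func main() {
	tools := map[string]Tool{}
	for _, n := range []string{"read_file", "list_files", "edit_file", "create_file"} {
		tools[n] = namedTool(n)
	}
	for _, s := range getToolSchemas(tools) {
		fmt.Println(s.Name)
	}
}
```

In the agent itself this would be a method on `*Agent` that reads `a.tools`.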

Error Handling

I learned to handle errors gracefully after watching too many early agents crash on simple mistakes (mostly tiny Gemma’s fault, lol):

```go
func (a *Agent) executeToolCall(toolCall ToolCall) string {
	tool, exists := a.tools[toolCall.Function.Name]
	if !exists {
		availableTools := make([]string, 0, len(a.tools))
		for name := range a.tools {
			availableTools = append(availableTools, name)
		}
		return fmt.Sprintf("Error: Unknown tool '%s'. Available: %v", toolCall.Function.Name, availableTools)
	}

	result, err := tool.Execute(json.RawMessage(toolCall.Function.Arguments))
	if err != nil {
		return fmt.Sprintf("Error executing %s: %v", toolCall.Function.Name, err)
	}

	return result
}
```

Bringing It All Together

The main function is super simple:

Create main.go:

```go
package main

import (
	"flag"
	"fmt"
	"log"
)

func main() {
	// Allow customization via command line
	serverURL := flag.String("server", "http://127.0.0.1:1234", "LM Studio server URL")
	model := flag.String("model", "qwen3-4b-thinking-2507-mlx", "Model to use")
	flag.Parse()

	// Connect everything
	client := NewLMStudioClient(*serverURL, *model)
	agent := NewAgent(client)

	fmt.Printf("Connecting to %s using model %s\n\n", *serverURL, *model)

	if err := agent.Run(); err != nil {
		log.Fatal(err)
	}
}
```

Building and Testing

Time to see our creation in action:

```shell
go build -o agent
./agent
```

You should see:

```text
Connecting to http://127.0.0.1:1234 using model qwen3-4b-thinking-2507-mlx

🤖 Local AI Agent (Powered by Gemma 3)
Available tools: read_file, list_files, edit_file, create_file
Type 'exit' to quit
--------------------------------------------------

👤 You:
```

Let’s verify that our setup works with a quick API test:

```shell
curl -s -X POST http://127.0.0.1:1234/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "qwen3-4b-thinking-2507-mlx",
    "messages": [
      {"role": "user", "content": "List the files in the test_files directory"}
    ],
    "tools": [{
      "type": "function",
      "function": {
        "name": "list_files",
        "description": "List files in a directory",
        "parameters": {
          "type": "object",
          "properties": {
            "path": {"type": "string", "description": "Directory path"}
          },
          "required": ["path"]
        }
      }
    }],
    "tool_choice": "auto"
  }'
```

If you see a tool call in the response, you’re ready to go!

Seeing It In Action

Let me walk you through what it feels like to use the agent. When you type a message, here’s what happens behind the scenes:

- Your message gets added to the conversation history

- The full conversation + available tools are sent to the AI

- The AI thinks and decides whether it needs to use tools

- If tools are needed, the agent executes them and feeds results back

- The AI formulates a response using the tool results

- You see the final answer
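main.go calls `agent.Run()`, which the post doesn’t show. Here’s a minimal sketch of that read loop, assuming one line of input per message and `exit` to quit. `runREPL` and `runScript` are hypothetical; in the agent, `handle` would roughly append the user message to `a.messages` and call `processWithAI()` (which prints its own answer rather than returning it). Taking an `io.Reader` and `io.Writer` instead of using `os.Stdin`/`os.Stdout` directly keeps the loop testable.

```go
package main

import (
	"bufio"
	"fmt"
	"io"
	"os"
	"strings"
)

// runREPL reads one line per turn, stops on "exit" or end of input, and
// hands every other non-empty line to handle.
func runREPL(in io.Reader, out io.Writer, handle func(string) string) error {
	scanner := bufio.NewScanner(in)
	for {
		fmt.Fprint(out, "👤 You: ")
		if !scanner.Scan() {
			return scanner.Err() // nil on plain EOF
		}
		input := strings.TrimSpace(scanner.Text())
		if input == "" {
			continue
		}
		if input == "exit" {
			fmt.Fprintln(out, "👋 Goodbye!")
			return nil
		}
		fmt.Fprintf(out, "\n🤖 Agent: %s\n\n", handle(input))
	}
}

// runScript drives runREPL from a string, handy for testing without a terminal.
func runScript(script string, handle func(string) string) (string, error) {
	var out strings.Builder
	err := runREPL(strings.NewReader(script), &out, handle)
	return out.String(), err
}

func main() {
	echo := func(s string) string { return "you said: " + s }
	if err := runREPL(os.Stdin, os.Stdout, echo); err != nil {
		fmt.Fprintln(os.Stderr, err)
	}
}
```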

Here’s a real session that shows this flow:

```text
$ ./agent
Connecting to http://127.0.0.1:1234 using model qwen3-4b-thinking-2507-mlx

🤖 Local AI Agent (Powered by Gemma 3)
Available tools: read_file, list_files, edit_file, create_file
Type 'exit' to quit
--------------------------------------------------

👤 You: list files in test_files

🔧 Using tools...
 Model requested 1 tool(s)
 ✓ list_files executed
 Result: 📄 hello.txt
📄 data.json

🤖 Agent: The files in the test_files directory are:
- hello.txt
- data.json

👤 You: read hello.txt from test_files

🔧 Using tools...
 Model requested 1 tool(s)
 ✓ read_file executed
 Result: Hello from the test file!
This is a sample file for testing our local AI agent.
It contains multiple lines of text.
Feel free to edit me!

🤖 Agent: The contents of `test_files/hello.txt` are:

Hello from the test file!
This is a sample file for testing our local AI agent.
It contains multiple lines of text.
Feel free to edit me!
```

Model Comparison: What I’ve Learned

After testing various models, I’ve discovered some interesting patterns that might help you choose the right one for your needs.

Gemma 3 270M: The Flash

Gemma 3 works and is very fast, but it sometimes misunderstands instructions. It’s great for prototyping because:

- Lightning-fast responses (typically under 200ms), and super quick to first token too!

- Supports function calling (yes, even at 270M parameters!). It’s insane how capable small AI models are right now

- Low resource usage (runs on modest hardware): the 8-bit version I use needs about 1GB of RAM even with a large context window

But it has quirks:

- Sometimes writes Python code instead of calling tools

- May use different parameter names than expected, and sometimes hallucinates random parameter names (genuinely quite annoying; strict custom rules could potentially fix this, but I didn’t add any for this project)

- Best with very clear, direct prompts
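Strict rules weren’t part of this build, but one could look like the sketch below: reject argument names a tool doesn’t declare, and return an error string as the tool result so the model gets a chance to retry with the right names. `unknownParams` and `strictCheck` are hypothetical helpers, and whether a model as small as Gemma 3 270M actually recovers from such an error message is untested here.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
	"strings"
)

// unknownParams returns argument keys the tool doesn't declare,
// sorted so the resulting error message is stable.
func unknownParams(args json.RawMessage, allowed ...string) ([]string, error) {
	var params map[string]interface{}
	if err := json.Unmarshal(args, &params); err != nil {
		return nil, fmt.Errorf("invalid arguments: %w", err)
	}
	allowedSet := make(map[string]bool, len(allowed))
	for _, a := range allowed {
		allowedSet[a] = true
	}
	var unknown []string
	for k := range params {
		if !allowedSet[k] {
			unknown = append(unknown, k)
		}
	}
	sort.Strings(unknown)
	return unknown, nil
}

// strictCheck returns "" when the arguments are acceptable, otherwise an
// "Error: ..." string the agent can send back as the tool result.
func strictCheck(tool string, args json.RawMessage, allowed ...string) string {
	unknown, err := unknownParams(args, allowed...)
	if err != nil {
		return "Error: " + err.Error()
	}
	if len(unknown) > 0 {
		return fmt.Sprintf("Error: %s does not accept %s; use only: %s",
			tool, strings.Join(unknown, ", "), strings.Join(allowed, ", "))
	}
	return ""
}

func main() {
	args := json.RawMessage(`{"filepath":"a.txt","mode":"r"}`)
	fmt.Println(strictCheck("read_file", args, "path", "file", "filename"))
}
```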

Qwen 3 4B: The Thinker

Qwen 3 4B has become my go-to for serious work. It’s slower (because of its lengthy thinking process) but dramatically better.

Here’s a real comparison using the same vague prompt: “edit the file in test_files and do what you wish to them”

Qwen 3 4B Response:

```text
🔧 Using tools...
 Model requested 1 tool(s)
 ✓ list_files executed
 Result: 📄 comparison_test.py
📄 data.json
📄 hello.txt

🔧 Using tools...
 Model requested 1 tool(s)
 ✓ edit_file executed
 Result: File edited successfully

🤖 Agent: <think>
The user said "edit the file in test_files and do what you wish".
First, I needed to see what files are available, so I listed them.
I see hello.txt is a good candidate for editing. I'll update it
to say "Hello World!" instead of just "Hello".
</think>

The file `hello.txt` in `test_files` has been successfully edited
to contain: **Hello World!**
```

Gemma 3 270M Response:

```python
import os

def edit_files():
    try:
        for filename in os.listdir("test_files"):
            if filename.endswith(".txt"):
                print(f"Editing {filename}")
    except Exception as e:
        print(f"Error: {e}")
```

The difference is stark. Qwen:

- Lists files first to understand what’s available

- Makes intelligent decisions about which file to edit

- Shows its reasoning process in `<think>` blocks

- Actually performs the action rather than generating code

- Communicates clearly about what it did
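If you’d rather not print Qwen’s reasoning, you can strip it before display. Here’s a minimal sketch; `stripThink` is a hypothetical helper you’d apply where the agent prints `assistantMessage.Content`. It also handles output that contains only a closing `</think>`, which some thinking models produce when the chat template supplies the opening tag.

```go
package main

import (
	"fmt"
	"strings"
)

// stripThink removes reasoning blocks and trims what's left, so only the
// final answer is shown.
func stripThink(content string) string {
	// Only a closing tag (the opening one came from the template):
	// drop everything up to and including it.
	if i := strings.Index(content, "</think>"); i != -1 && !strings.Contains(content[:i], "<think>") {
		content = content[i+len("</think>"):]
	}
	for {
		start := strings.Index(content, "<think>")
		if start == -1 {
			break
		}
		end := strings.Index(content[start:], "</think>")
		if end == -1 {
			// Unterminated block (e.g. cut off by max_tokens): drop the rest.
			content = content[:start]
			break
		}
		content = content[:start] + content[start+end+len("</think>"):]
	}
	return strings.TrimSpace(content)
}

func main() {
	raw := "<think>The user wants an edit...</think>\n\nThe file `hello.txt` has been edited."
	fmt.Println(stripThink(raw))
}
```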

Complete Qwen Interaction Example

Here’s a full session that showcases Qwen’s impressive reasoning abilities:

```text
$ ./agent
Connecting to http://127.0.0.1:1234 using model qwen3-4b-thinking-2507-mlx

🤖 Local AI Agent (Powered by Gemma 3)
Available tools: read_file, list_files, edit_file, create_file
Type 'exit' to quit
--------------------------------------------------

👤 You: edit the file in test_files and do what you wish to them

🔧 Using tools...
 Model requested 1 tool(s)
 ✓ list_files executed
 Result: 📄 comparison_test.py
📄 data.json
📄 hello.txt

🔧 Using tools...
 Model requested 1 tool(s)
 ✓ edit_file executed
 Result: File edited successfully

🤖 Agent: <think>Okay, let me go through the user's request step by step. The user initially said, "edit the file in test_files and do what you wish to them".

First, I needed to figure out which file to edit. Since they didn't specify a particular file, I used the list_files function on "test_files" to see what's there. The response showed four files: comparison_test.py, data.json, hello.txt.

Next, I had to choose which one to edit. The user said "do what you wish", so I picked hello.txt as it's a straightforward text file. To make the edit, I thought about replacing "Hello" with "Hello World!".

But wait, before making the edit, maybe I should check what's in hello.txt. However, since the user didn't specify any existing content and said "do what you wish", I proceeded with a simple replacement. The tool call for edit_file was made, and the response came back as "File edited successfully".

Now, I need to confirm that the edit was successful. The user probably expects a confirmation message. Since the tool response says it's done, I should let them know that hello.txt has been updated. No further tool calls are needed here because the edit succeeded.

I should phrase it clearly: "The file hello.txt in test_files has been successfully edited to contain 'Hello World!'." That's straightforward and addresses the user's request without overcomplicating.</think>

The file `hello.txt` in `test_files` has been successfully edited to contain: **Hello World!**

👤 You: exit
👋 Goodbye!
```

What Makes This Impressive:

- Autonomous Problem Solving - Didn’t know which file to edit, so listed them first

- Logical Decision Making - Chose the most appropriate file to modify

- Transparent Reasoning - Shows complete thought process

- Tool Chaining - Used multiple tools in sequence naturally

- Natural Communication - Provides clear, human-friendly feedback

Model Recommendations

| Model | Speed | Quality | Best For |
|---|---|---|---|
| Gemma 3 270M | Very Fast | Unreliable | Prototyping, testing |
| Qwen3 4B Thinking | Slower | Excellent | Production, complex tasks |
| GPT-OSS 20B | Slow | Excellent | Heavy-duty work |

For learning and experimentation, start with Gemma. For anything serious, use Qwen 3 4B or larger.

Troubleshooting: What I’ve Learned the Hard Way

Building local agents means debugging everything yourself. Here’s my systematic approach when things go wrong:

Connection Issues

“Connection refused” errors:

```shell
# Test if LM Studio is responding
curl http://127.0.0.1:1234/v1/models
```

If this fails, check that LM Studio’s local server is actually running.

“Model not found” errors: The model name in your code must exactly match the identifier LM Studio uses for the loaded model. Check the Developer tab, or the `id` fields returned by `/v1/models`, for the exact name.
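To look up the exact identifier from Go instead of curl, a sketch like this prints the `id` of each model the server reports. It assumes the OpenAI-style `/v1/models` response shape (`{"object": "list", "data": [{"id": ...}]}`); `parseModelIDs` is a hypothetical helper.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
)

// modelList mirrors the OpenAI-style /v1/models response.
type modelList struct {
	Data []struct {
		ID string `json:"id"`
	} `json:"data"`
}

// parseModelIDs extracts the model identifiers from a /v1/models body.
func parseModelIDs(body []byte) ([]string, error) {
	var list modelList
	if err := json.Unmarshal(body, &list); err != nil {
		return nil, fmt.Errorf("failed to parse models: %w", err)
	}
	ids := make([]string, 0, len(list.Data))
	for _, m := range list.Data {
		ids = append(ids, m.ID)
	}
	return ids, nil
}

func main() {
	resp, err := http.Get("http://127.0.0.1:1234/v1/models")
	if err != nil {
		fmt.Println("server not reachable:", err)
		return
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		fmt.Println("read error:", err)
		return
	}
	ids, err := parseModelIDs(body)
	if err != nil {
		fmt.Println(err)
		return
	}
	for _, id := range ids {
		fmt.Println(id) // pass one of these to -model
	}
}
```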

Tool Issues

Tools not being called:

- Try more explicit prompts: “use the list_files tool to show me files in the documents folder”

- Add debug output to see what the AI is actually deciding to do

- Smaller models need clearer instructions

Parameter mismatches: Add logging to see what parameters the model is sending:

```go
fmt.Printf("Tool received: %s\n", string(args))
```

Then add handling for whatever parameter names you see.

Performance Issues

Slow responses with larger models:

- Conversation history grows quickly, so consider pruning old messages

- Tool schemas are sent with every request, which adds overhead (and can be quite annoying)

- Larger models simply take more time to think, especially when running locally, where they may not be as fast as models served by third-party inference providers like Cerebras

Memory usage: Go’s garbage collector usually handles this well, but keep an eye on conversation history size if you’re having long sessions.
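Here’s a minimal sketch of the pruning idea, assuming a simple message-count budget (`keep` is a hypothetical knob; counting tokens would be more precise). `pruneHistory` is hypothetical too. It keeps a leading system message if there is one, and never starts the kept window on a `tool` message, since a tool result whose request was pruned away can be rejected by the server or confuse the model. You might call it as `a.messages = pruneHistory(a.messages, 20)` before each request.

```go
package main

import "fmt"

// Message is trimmed to the fields this sketch needs.
type Message struct {
	Role       string
	Content    string
	ToolCallID string
}

// pruneHistory keeps any leading system message plus at most the last
// keep messages, skipping forward past tool results at the cut point.
func pruneHistory(msgs []Message, keep int) []Message {
	var head []Message
	rest := msgs
	if len(msgs) > 0 && msgs[0].Role == "system" {
		head, rest = msgs[:1], msgs[1:]
	}
	if len(rest) <= keep {
		return msgs
	}
	start := len(rest) - keep
	for start < len(rest) && rest[start].Role == "tool" {
		start++
	}
	out := make([]Message, 0, len(head)+len(rest)-start)
	out = append(out, head...)
	return append(out, rest[start:]...)
}

func main() {
	history := []Message{
		{Role: "user", Content: "hi"},
		{Role: "assistant", Content: "hello"},
		{Role: "user", Content: "list files"},
	}
	fmt.Println(len(pruneHistory(history, 2)))
}
```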

Final Thoughts

What surprised me most about building local agents was how approachable it turned out to be. No complex frameworks, no cloud dependencies, no API keys to manage. Just a conversation loop, some tools, and a local AI model. That guide from Ampcode was quite legit too, it gave me a great place to start from.

The gap between a simple chatbot and a capable agent is smaller than I expected. Give an AI the ability to take actions in the real world, and suddenly it becomes incredibly useful for everything from basic to complex tasks.

Your local machine is more powerful than you probably realize. Modern laptops can run models that would have been considered large-scale research projects just a few years ago. Take advantage of it for prototyping and research as much as you can.

What You’ve Learned

By building this agent, you’ve learned:

- How AI agents actually work under the hood

- The mechanics of tool calling and function execution

- HTTP API integration without complex SDKs

- Go programming patterns for building reliable systems

- How to handle model variations and edge cases

Your Next Steps

Start simple and build up:

Sample projects:

- Add a delete_file tool (follow the same pattern as create_file)

- Add colored terminal output for better readability

- Implement web search using a search API

- Create a shell command execution tool

- Create specialized agents for specific domains

- Add retrieval-augmented generation for long documents
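For the first suggestion, here’s a sketch of a `delete_file` tool following the same pattern. `DeleteFileTool` is hypothetical: it only accepts `path` (add aliases as needed) and refuses to delete directories as a basic safety check. `Schema()` is omitted for brevity; it would mirror `ReadFileTool`’s, with `path` required.

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
)

// DeleteFileTool follows the Name/Description/Execute shape of the other tools.
type DeleteFileTool struct{}

func (t DeleteFileTool) Name() string        { return "delete_file" }
func (t DeleteFileTool) Description() string { return "Delete a single file (not a directory)" }

func (t DeleteFileTool) Execute(args json.RawMessage) (string, error) {
	var params map[string]interface{}
	if err := json.Unmarshal(args, &params); err != nil {
		return "", fmt.Errorf("invalid arguments: %w", err)
	}
	path, _ := params["path"].(string)
	if path == "" {
		return "", fmt.Errorf("path parameter required")
	}
	info, err := os.Stat(path)
	if err != nil {
		return "", fmt.Errorf("failed to stat file: %w", err)
	}
	if info.IsDir() {
		return "", fmt.Errorf("%s is a directory; refusing to delete", path)
	}
	if err := os.Remove(path); err != nil {
		return "", fmt.Errorf("failed to delete file: %w", err)
	}
	return fmt.Sprintf("File deleted: %s", path), nil
}

func main() {
	// Create a throwaway file, then delete it through the tool.
	f, err := os.CreateTemp("", "agent-demo-*.txt")
	if err != nil {
		fmt.Println(err)
		return
	}
	f.Close()
	args, _ := json.Marshal(map[string]string{"path": f.Name()})
	out, err := DeleteFileTool{}.Execute(args)
	fmt.Println(out, err)
}
```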

The foundation you’ve built here can grow into almost anything. Local agents are pretty cool and they might be the future of how we interact with AI, especially for those who value their security and privacy.

The complete source code is available in this GitHub repository. Try it yourself, break it, fix it, and build something amazing on top of this foundation. The beauty of local agents is that you own the entire stack.